Digital Cameras

The concepts of digitizing images on scanners and converting video signals to digital predate the concept of taking still pictures by digitizing signals from an array of discrete sensor elements. Eugene F. Lally of the Jet Propulsion Laboratory published the first description of how to produce still photos in a digital domain using a mosaic photosensor.[2] The purpose was to provide onboard navigation information to astronauts during space missions. The mosaic array periodically recorded still photos of star and planet locations during transit and, when approaching a planet, provided additional distance information for orbiting and as a guide for landing.

The concept included design elements that foreshadowed the first digital camera.

Texas Instruments

Texas Instruments designed a filmless analog camera in 1972, but it is not known whether it was ever built. The first recorded digital camera was developed at Kodak, which assigned engineer Steven J. Sasson to build a prototype in 1975.

This camera used the then-new CCD solid-state sensor chips developed by Fairchild Semiconductor in 1973. The result was a camera weighing approximately 4 kg that took black-and-white photos with a resolution of 0.01 megapixels. It recorded images on cassette tape and took 23 seconds to capture its first image, in December 1975. This prototype was a technical exercise, not intended for production.

The first digital camera to reach production was the Cromemco Cyclops, launched in 1975. The Cyclops had an MOS sensor with a resolution of 0.001 megapixels.

Analog Electronic Cameras

Handheld electronic cameras, in the sense of a device made to be carried and used like a handheld film camera, appeared in 1981 with the demonstration of the Sony Mavica (Magnetic Video Camera). This model should not be confused with the later Sony cameras that also use the Mavica name.

This was an analog camera based on television technology that recorded onto a two-inch “video floppy disk”. Essentially it was a video camera that recorded still images, 50 per disk in field mode and 25 per disk in frame mode. The image quality was considered equal to that of the televisions of the time.

Analog Cameras

Analog electronic cameras do not seem to have reached the market until 1986, with the Canon RC-701. Canon demonstrated this model at the 1984 Olympics, printing the images in newspapers. Several factors delayed the widespread adoption of analog cameras: the cost (above $20,000), poor image quality compared to film, and the lack of quality printers.

Capturing and printing an image originally required access to equipment such as a frame grabber, which was beyond the reach of the average consumer. Several reader devices later became available for viewing video floppy disks on a screen, but the format was never standardized as a computer drive.

Early adopters tended to be in the news media, where the cost was outweighed by the utility and the ability to transmit images over telephone lines. The poor image quality was offset by the low resolution of newspaper graphics. This ability to transmit images without resorting to satellites proved useful during the Tiananmen protests of 1989 and the first Gulf War in 1991.

The first analog camera sold to consumers may have been the Canon RC-250 Xapshot in 1988. A notable analog camera produced in the same year was the Nikon QV-1000C, which sold approximately 100 units and recorded images in grayscale; in newspaper print, its quality was equal to that of film cameras. In appearance it resembled a modern digital SLR.

The arrival of fully digital cameras

The first fully digital camera that recorded images to a computer file was the Cyclops, introduced in 1975 by Cromemco.[4] The Cyclops used an MOS sensor and could be connected to any microcomputer with an S-100 bus. The first digital camera with internal storage was the Fuji DS-1P, created in 1988, which recorded to a 16 MB internal memory card. Fuji showed a prototype of the DS-1P, but the camera was never released.

The first digital camera available for use with the IBM PC and the Macintosh was the Dycam Model 1, launched in 1991 and also sold as the Logitech Fotoman. It used a CCD sensor, recorded images digitally, and had a cable connection for direct download to the computer.[5][6][7]

Kodak

In 1991, Kodak launched the DCS-100, the first in a long line of professional Kodak SLR cameras that were based in part on film camera bodies, often from Nikon. It used a 1.3-megapixel sensor and sold for about $13,000.

The transition to digital formats was aided by the formation of the first JPEG and MPEG standards in 1988, which allowed image and video files to be compressed for storage. The first camera aimed at consumers with a liquid crystal display on the back was the Casio QV-10 in 1995, and the first camera to use CompactFlash memory cards was the Kodak DC-25 in 1996.

The consumer digital camera market originally consisted of low-resolution cameras. In 1997 the first one-megapixel consumer cameras were offered. The first camera able to record video clips may have been the Ricoh RDC-1 in 1995.

In 1999 Nikon introduced the D1, a 2.74-megapixel camera that was one of the first digital SLRs and established the company as an important manufacturer in this market. With an initial price of less than $6,000, it was affordable for both professional photographers and high-end consumers. The camera also used Nikon F-mount lenses, which meant that photographers could keep using many of the lenses they already owned for their film cameras.

In 2003, Canon introduced the Digital Rebel, also known as the 300D, a 6-megapixel consumer camera and the first DSLR to cost less than $1,000.

In 2008, a medium-format Leica camera with a resolution of 37 megapixels was presented at a trade fair in Germany.

Image resolution

The resolution of a digital camera is limited by the camera sensor (usually a CCD or CMOS sensor), which responds to light signals and takes over the role of film in traditional photography.

The sensor is made up of millions of “buckets” that accumulate charge in response to light. Generally, each bucket responds only to a limited range of light wavelengths because of the color filter placed over it.

Each of these buckets is called a pixel. A demosaicing and interpolation algorithm combines the per-pixel readings for each wavelength range into an RGB image in which three values per pixel represent a complete color.

Canon

Canon SX30 1/2.3" CCD camera sensor (6.17 × 4.55 mm)

CCD devices transport the charge across the chip to an analog-to-digital converter, which converts the value of each pixel into a digital value by measuring the charge that reaches it. The number of bits in the converter determines how wide a range of tones the image can have. For example, with a single bit the only values are 0 and 1, which can represent just the presence or absence of light: a pure black-and-white image.
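The relationship between converter bits and tonal range can be sketched in a few lines of Python. This is only an illustration of an idealized converter: the function names are hypothetical, and the input signal is assumed to be normalized to the range 0.0 to 1.0.

```python
def levels_for_bit_depth(bits: int) -> int:
    """Number of distinct output values of an ideal converter with `bits` bits."""
    if bits < 1:
        raise ValueError("bits must be at least 1")
    return 2 ** bits


def quantize(signal: float, bits: int) -> int:
    """Map a normalized signal (0.0 = no light, 1.0 = full scale) to an integer code."""
    max_code = levels_for_bit_depth(bits) - 1
    clamped = min(max(signal, 0.0), 1.0)  # keep out-of-range inputs inside the scale
    return round(clamped * max_code)


# Compare a 1-bit converter with some larger (illustrative) bit depths.
for bits in (1, 8, 12, 14):
    print(f"{bits:>2} bits -> {levels_for_bit_depth(bits)} levels")
```

With one bit there are only two levels, matching the pure black-and-white case described above; each additional bit doubles the number of levels.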

CMOS devices, on the other hand, contain several transistors in each pixel. The digital conversion takes place within the sensor structure itself, so a separate converter is not needed. Their manufacturing process is simpler, which makes cameras that use this technology cheaper.

The resulting number of pixels in the image determines its size. For example, an image 640 pixels wide by 480 pixels high has 307,200 pixels, or approximately 307 kilopixels; an image 3872 pixels high by 2592 pixels wide has 10,036,224 pixels, or approximately 10 megapixels.
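The arithmetic behind these figures is simply width times height. A minimal sketch, with hypothetical function names, reproduces the two examples:

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in an image of the given dimensions."""
    return width * height


def megapixels(width: int, height: int) -> float:
    """Pixel count expressed in millions of pixels."""
    return pixel_count(width, height) / 1_000_000


for width, height in ((640, 480), (2592, 3872)):
    total = pixel_count(width, height)
    print(f"{width} x {height} = {total:,} pixels ({megapixels(width, height):.2f} MP)")
```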

According to professional photographers, a film (chemical) photograph taken with a compact camera is roughly equivalent to a 30-megapixel digital photograph.

Image quality

The pixel count is commonly the only figure quoted to indicate the resolution of a camera, but treating it as the whole story is a misconception. Several factors affect the quality of a sensor, including sensor size, lens quality, pixel organization (for example, a monochrome camera without a Bayer filter mosaic has a higher resolution than a typical color camera), and the dynamic range of the sensor.

Many digital compact cameras are criticized for having too many pixels in relation to the small size of the sensor they incorporate.

Increasing pixel density decreases the sensitivity of the sensor: each pixel is so small that it collects very few photons, so the sensor must be illuminated more to preserve the signal-to-noise ratio. This loss of sensitivity leads to noisy pictures, poor shadow quality, and generally poor images in low light.
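The link between pixel size and noise can be illustrated with a rough sketch. It assumes an idealized sensor limited only by photon shot noise, where a pixel that collects N photons has a signal-to-noise ratio of about the square root of N, and it assumes that the photons collected scale with pixel area. The function names and the photon flux figure are hypothetical and chosen only for illustration.

```python
import math


def photons_collected(pixel_pitch_um: float, photons_per_um2: float) -> float:
    """Photons reaching one square pixel, assuming collection scales with its area."""
    return pixel_pitch_um ** 2 * photons_per_um2


def shot_noise_snr(photons: float) -> float:
    """SNR of an ideal shot-noise-limited pixel: N / sqrt(N) = sqrt(N)."""
    return math.sqrt(photons)


FLUX = 100.0  # hypothetical exposure, in photons per square micrometre

for pitch in (4.0, 2.0, 1.0):
    n = photons_collected(pitch, FLUX)
    print(f"pitch {pitch} um: {n:.0f} photons, SNR about {shot_noise_snr(n):.0f}")
```

Under these assumptions, halving the pixel pitch quarters the photons per pixel and halves the signal-to-noise ratio at the same exposure, which is why denser sensors need more light to look equally clean.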

Pixel cost: Projection of pixels per dollar.

While the technology has been improving, the costs have decreased dramatically.

Digital Camera

Taking price per pixel as a basic measure of value for a digital camera, the number of pixels that the same amount of money buys in new cameras has increased continuously and steadily, in line with the principles of Moore’s law. This predictability of camera prices was first presented in 1998 at the Australian PMA DIMA conference by Barry Hendy and has since been called “Hendy’s Law”.[8]
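As a rough illustration of the pixels-per-dollar measure, the sketch below applies it to three cameras using the resolutions and prices quoted earlier in this article. The function name is hypothetical, and because two of the prices are quoted only as "less than" a figure, the results for those cameras are lower bounds rather than precise values.

```python
def pixels_per_dollar(megapixels: float, price_usd: float) -> float:
    """Pixels bought per dollar for a camera of the given resolution and price."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return megapixels * 1_000_000 / price_usd


# (year, model, megapixels, approximate price in USD) as quoted in this article.
cameras = [
    (1991, "Kodak DCS-100", 1.3, 13_000),
    (1999, "Nikon D1", 2.74, 6_000),
    (2003, "Canon Digital Rebel (300D)", 6.0, 1_000),
]

for year, model, mp, price in cameras:
    value = pixels_per_dollar(mp, price)
    print(f"{year}  {model:<28} {value:>8.0f} pixels per dollar")
```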

Methods to capture images

At the heart of a digital camera is a CCD image sensor.

Since the first digital cameras were introduced to the market, there have been three main methods of capturing the image, depending on the hardware configuration of the sensor and the color filters.

Camera Sensor

The first method is called single-shot, in reference to the number of times the camera’s sensor is exposed to the light passing through the lens. Single-shot systems use either a CCD with a Bayer filter or three independent image sensors (one for each of the additive primary colors: red, green, and blue) that are exposed to the same image through an image-separating optical system.

The second method is called multi-shot, because the sensor is exposed to the image in a sequence of three or more openings of the lens shutter.

There are several ways of applying this technique. Originally, the most common was to use a single image sensor with three filters (again red, green, and blue) placed in front of it in turn to obtain the additive color information. Another multi-shot method uses a single CCD with a Bayer filter but physically moves the sensor in the focal plane of the lens to compose an image of higher resolution than the CCD would otherwise allow.

A third version combines the two methods without a Bayer filter on the sensor.
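The three-filter variant of multi-shot capture can be sketched as follows. This is a simplified illustration, not how any particular camera implements it: each exposure is assumed to be a same-sized grid of intensity values taken through one filter, and the function name is hypothetical.

```python
def merge_exposures(red, green, blue):
    """Combine three same-sized monochrome frames into one frame of (R, G, B) tuples."""
    shapes = {(len(frame), len(frame[0])) for frame in (red, green, blue)}
    if len(shapes) != 1:
        raise ValueError("all three exposures must have the same dimensions")
    return [
        list(zip(r_row, g_row, b_row))
        for r_row, g_row, b_row in zip(red, green, blue)
    ]


# Three tiny 1 x 2 exposures of a stationary subject, one per color filter.
red_frame = [[200, 10]]
green_frame = [[180, 20]]
blue_frame = [[40, 30]]

print(merge_exposures(red_frame, green_frame, blue_frame))
```

Because every pixel gets a measured value for all three colors, no interpolation is needed, but the subject must not move between exposures.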

The third method is called scanning, because the sensor moves across the focal plane like the sensor of a desktop scanner. Linear or tri-linear sensors use only a single line of photosensors, or three lines for the three colors. In some cases, scanning is accomplished by rotating the entire camera; a rotating line camera offers very high resolution images.

The choice of method for a given capture is largely determined by the subject to be photographed. It is generally inappropriate to attempt to photograph a moving subject with anything other than a single-shot system. However, scanning and multi-shot systems deliver the highest color fidelity and the largest sizes and resolutions. This makes these techniques attractive for commercial photographers who shoot stationary subjects in large format.

Digital Photography

Recently, drastic improvements in single-shot cameras and RAW image processing have made CCD-based single-shot cameras almost entirely predominant in commercial photography, not to mention digital photography as a whole. Single-shot cameras based on CMOS sensors are also common.

Filter mosaics, interpolation, and aliasing

The Bayer arrangement of color filters on an image sensor.

Most current consumer digital cameras use a Bayer filter mosaic together with an optical anti-aliasing filter, which reduces the aliasing caused by the reduced sampling of the individual primary-color images. A demosaicing algorithm interpolates the color information to create a complete array of RGB image data. Cameras that use a single-shot 3CCD beam-splitter approach, a three-filter multi-shot approach, or the Foveon X3 sensor use neither anti-aliasing filters nor demosaicing.

Raw Software

The firmware in the camera, or the software in a raw converter program such as Adobe Camera Raw, interprets the raw sensor data to obtain a full-color image, because the RGB color model requires three intensity values for each pixel: one each for red, green, and blue (other color models, when used, also require three or more values per pixel). A single sensor element cannot record these three intensities simultaneously, so a color filter array (CFA) must be used to selectively filter a particular color for each pixel.

The Bayer filter pattern is a repeating 2 × 2 mosaic of light filters, with green ones in opposite corners and red and blue in the other two positions. The high proportion of green takes advantage of the characteristics of the human visual system, which derives brightness mostly from green and is far more sensitive to brightness than to hue or saturation. A four-color filter pattern is sometimes used, often involving two different shades of green. This potentially provides more accurate color but requires a slightly more complicated interpolation process.

The color intensity values not captured for a given pixel can be interpolated (or estimated) from the values of adjacent pixels that did capture the color being calculated.
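The repeating 2 × 2 layout can be sketched in Python. The sketch assumes the common RGGB ordering (red in the top-left corner); real sensors may start the pattern at a different corner, and the function names are hypothetical.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color over the pixel at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def bayer_mosaic(height: int, width: int) -> list:
    """Grid of filter letters for a sensor of the given size."""
    return [[bayer_color(r, c) for c in range(width)] for r in range(height)]


for row in bayer_mosaic(4, 4):
    print(" ".join(row))
```

Counting the letters in any even-sized grid shows that half of the pixels are green and a quarter each are red and blue.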
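One simple way to do this interpolation is to average the neighboring pixels that carry the missing color. The sketch below illustrates that idea (bilinear interpolation over a 3 × 3 neighborhood) under the assumption of an RGGB layout; it is not the algorithm of any specific camera or raw converter, which typically use more sophisticated methods, and all names are hypothetical.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color over the pixel at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Turn a grid of single-color readings into a grid of (R, G, B) tuples."""
    height, width = len(raw), len(raw[0])
    if height < 2 or width < 2:
        raise ValueError("need at least a 2 x 2 sensor to see all three colors")
    image = []
    for r in range(height):
        image_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Collect readings from the 3 x 3 neighborhood, clipped at the borders.
            for rr in range(max(r - 1, 0), min(r + 2, height)):
                for cc in range(max(c - 1, 0), min(c + 2, width)):
                    color = bayer_color(rr, cc)
                    sums[color] += raw[rr][cc]
                    counts[color] += 1
            own = bayer_color(r, c)
            pixel = {}
            for channel in "RGB":
                if channel == own:
                    pixel[channel] = float(raw[r][c])  # keep the measured value
                else:
                    pixel[channel] = sums[channel] / counts[channel]  # estimate
            image_row.append((pixel["R"], pixel["G"], pixel["B"]))
        image.append(image_row)
    return image


# A flat scene: every red reading is 10, every green 20, every blue 30.
scene = {"R": 10, "G": 20, "B": 30}
raw = [[scene[bayer_color(r, c)] for c in range(4)] for r in range(4)]
print(demosaic(raw)[1][1])
```

On a uniform scene the estimate is exact; on real images, averaging across edges is what produces the color fringing that more advanced algorithms try to avoid.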

Connectivity: Most digital cameras can be connected directly to a computer to transfer their data. In the past, cameras had to be connected through a serial port. Today USB is the most widely used method, although some cameras use FireWire or Bluetooth. Most cameras are recognized as a USB storage device. Some models, such as the Kodak EasyShare One, can connect to the computer over a wireless network using the 802.11 (Wi-Fi) protocol.

A common alternative is a card reader, which may be able to read several types of storage media and can transfer data to the computer at high speed. Using a card reader also avoids draining the camera battery during the download, since the reader draws its power from the USB port.

An external card reader gives convenient direct access to the images on a collection of storage media. With only one storage card, however, moving it back and forth between the camera and the reader can be inconvenient. Many modern cameras support the PictBridge standard, which allows data to be sent directly to printers without the need for a computer.

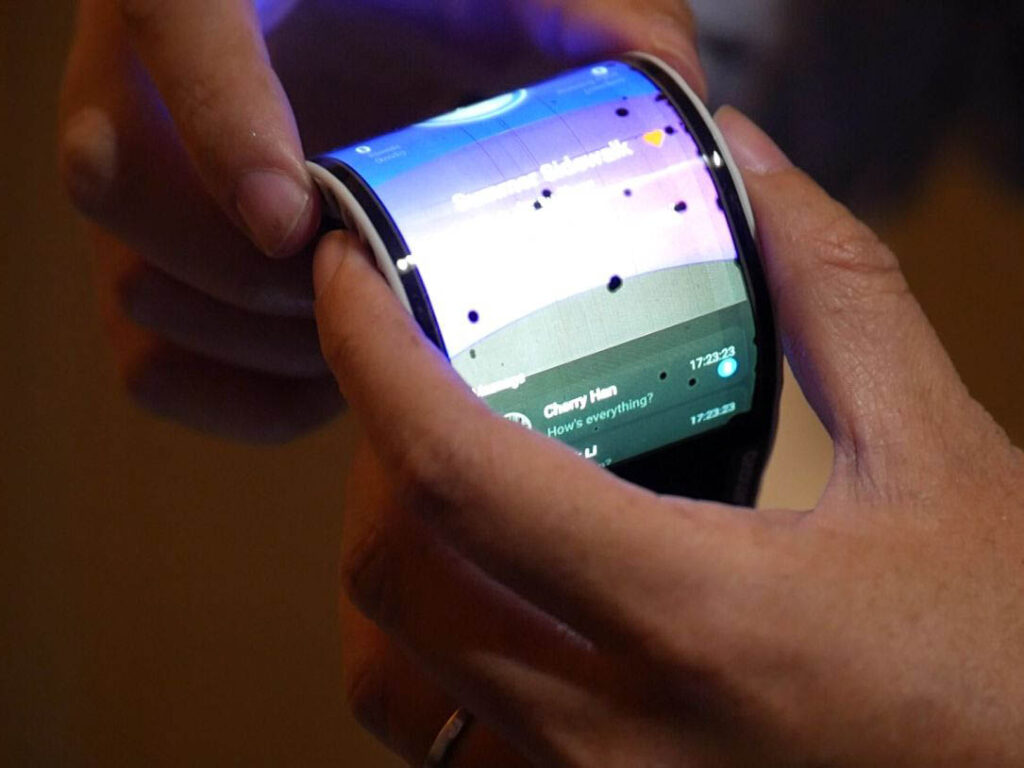

Integration: Current technology allows digital cameras to be built into various everyday devices. Small electronic devices, especially those used for communication, such as cell phones, smartphones, PDAs, and laptops, often contain integrated digital cameras. In addition, some digital camcorders incorporate a digital camera.

Because of the limited storage capacity and the emphasis on utility over quality in these integrated devices, the vast majority use the JPEG format to save images, since its high compression capacity outweighs the small loss of quality that it causes.

Image storage

Digital cameras in cell phones and low-priced cameras use built-in memory or flash memory. Memory cards are commonly used: CompactFlash (CF), Secure Digital (SD), xD, and Memory Stick cards for Sony cameras. Previously, 3½-inch disks were used for image storage.

Photos are stored as standard JPEG files, or in TIFF or RAW format for better image quality at the cost of a large increase in file size. Video files are commonly stored in formats such as AVI, DV, MPEG, MOV, and WMV.

Digital Cameras

Almost all digital cameras use compression techniques to make the most of storage space. These techniques usually take advantage of two common characteristics of photographs:

Patterns: it is very common for an image to contain areas where the same color (or the same sequence) is repeated many times (for example, a white wall). Such areas can be encoded so that they need less storage space. This type of compression does not usually achieve large reductions.

Irrelevance: just as MP3 encoding takes advantage of the inability of the auditory system to detect certain sounds (or their absence), digital cameras can use compression that discards information that the camera has captured but that the human eye will be unable to perceive.
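The pattern-based idea can be illustrated with run-length encoding, one of the simplest lossless schemes. This is only a sketch of the principle, not the method used by JPEG or by any particular camera, and the function names are hypothetical.

```python
def rle_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    runs = []
    for value in values:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1  # extend the current run
        else:
            runs.append([value, 1])  # start a new run
    return [(value, length) for value, length in runs]


def rle_decode(runs):
    """Rebuild the original sequence from (value, run_length) pairs."""
    values = []
    for value, length in runs:
        values.extend([value] * length)
    return values


# One row of pixels: a stretch of white wall followed by a few darker pixels.
row = [255] * 6 + [0, 0, 17]
encoded = rle_encode(row)
print(encoded)
print(rle_decode(encoded) == row)
```

The nine pixel values shrink to three pairs here, but a row with no repeated neighbors would not shrink at all, which is consistent with the modest gains mentioned above for this kind of compression.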

Memory cards:

CompactFlash cards / Microdrives: typically used in higher-end professional cameras. Microdrives are real hard drives in the CompactFlash form factor. Adapters allow SD cards to be used in a CompactFlash device.

Memory Stick: a proprietary type of flash memory manufactured by Sony. The Memory Stick comes in four variants: the M2, used in both Sony Ericsson cell phones and Sony digital cameras; the PRO Duo; the PRO; and the Duo. Some Memory Stick cards support MagicGate.

SD / MMC: a small flash memory card that is gradually supplanting CompactFlash. The original storage limit was 2 GB, which is being superseded by 4 GB cards. 4 GB cards are not recognized by all cameras, because they rely on a revision of the SD standard called SDHC (SD High Capacity). These cards also have to be formatted with the FAT32 file system, while many older cameras use FAT16, which has a partition limit of 2 GB.

SDHC: the newer SD format for cards of about 4 GB and above. Only some new cameras are compatible with it; it ensures faster data transfer.

MiniSD card: a smaller card (a little less than half the size of an SD card) used in devices such as mobile phone cameras.

MicroSD card: an even smaller version of the SD card (less than a quarter of its size), smaller than miniSD. Used in mobile phones that incorporate functions such as a camera or MP3 player.

xD card: a format created by Fuji and Olympus in 2002, smaller than an SD card.

SmartMedia: A now obsolete format that competed with CompactFlash, and was limited to 128MB capacity. One of the main differences was that SmartMedia had the memory controller integrated in the reading device, while in CompactFlash it was on the card. The xD type card was developed as a replacement for SmartMedia.

Samsung Galaxy Note 9

Samsung is remarkably prolific in producing cutting-edge smartphone technology. It not only makes some of the best flagships available but also offers options for more specific needs, and the Galaxy Note 9 is one of them.

In terms of phone and computing capabilities, the Galaxy Note 9 is impressive, and choosing between it, an S9, or an S10 requires weighing many factors. Considered as a smartphone camera alone, though, the Samsung Galaxy Note 9 has quite good features.

The latest Samsung smartphones do a very good job of combining the best camera hardware and lenses with AI, computational photography, and post-processing, and the Galaxy Note 9 is no exception.

It features a dual 12-megapixel rear camera that can switch between apertures depending on light conditions, while its secondary rear camera offers 2x optical zoom. That might not sound like a big number, but it is a telephoto lens, which brings subjects closer without losing much quality.

The Galaxy Note 9 also comes with an enhanced scene optimizer, which identifies the subjects in the image and automatically adjusts colors and white balance accordingly. The improved details can be hard to notice, but they are there.

Choosing between Samsung’s latest flagship and the latest Galaxy Note depends largely on what you want to use your smartphone for. The Galaxy Note 9’s camera is great, but you should definitely weigh the other advantages of a Galaxy Note before choosing this one.

Sony Xperia 1

Sony has been a firm competitor in the realm of professional photography, with excellent DSLRs and point-and-shoots alike. In the world of smartphone cameras, its Xperia series has never disappointed when it comes to photo and video.

A lot has been said about phone cameras, usually with special attention to their photography capabilities: some are more powerful in terms of camera hardware, while others leverage software and AI to produce great images.

Much less has been said about smartphone cameras for video and filmmaking. This is why the Sony Xperia 1 has a well-deserved place among video and film aficionados and pros alike. Its focus on high-quality smartphone videography is an interesting bet, and one worth exploring.

First, its aspect ratio is quite unusual but great for video. It features a 21:9 screen, making it much taller than other flagships, and the display is 4K, HDR, and notch-free.

What makes the Sony Xperia 1 so good for recording video with a smartphone is the amount of manual control it gives videographers. It also produces footage that holds up well to enhancement with post-processing tools.

There are weak points, such as mediocre battery life. Overall, though, for those who want a great phone from a brand with a long tradition in photography and video, and especially for those who want to produce great video with a smartphone, the Sony Xperia 1 is a great choice.